Reading 「PythonとJavaScriptではじめるデータビジュアライゼーション」 (Japanese edition of "Data Visualization with Python and JavaScript")

6.6.1 Caching pages

In settings.py, uncomment the HTTPCACHE_ENABLED = True line to turn on Scrapy's page cache.
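A minimal sketch of the relevant part of settings.py after the change, assuming the project was created with `scrapy startproject` (whose generated settings.py ships these HTTPCACHE_* lines commented out). Only HTTPCACHE_ENABLED has to change; the other values shown are Scrapy's defaults, written out here just for reference.

```python
# settings.py (excerpt): enable Scrapy's HTTP cache so repeated runs
# reuse downloaded pages instead of requesting them from Wikipedia again.
HTTPCACHE_ENABLED = True

# The lines below are Scrapy's default values, shown only for reference.
HTTPCACHE_EXPIRATION_SECS = 0     # 0 = cached responses never expire
HTTPCACHE_DIR = 'httpcache'       # kept under the project's .scrapy/ directory
HTTPCACHE_IGNORE_HTTP_CODES = []  # cache responses of every status code
HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```

With the filesystem storage, deleting the .scrapy/httpcache directory forces the next crawl to download everything fresh.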

6.6.2 Creating requests

    import scrapy
    import re

    BASE_URL = 'http://en.wikipedia.org'


    class NWinnerItem(scrapy.Item):
        name = scrapy.Field()
        link = scrapy.Field()
        year = scrapy.Field()
        category = scrapy.Field()
        country = scrapy.Field()
        gender = scrapy.Field()
        born_in = scrapy.Field()
        date_of_birth = scrapy.Field()
        date_of_death = scrapy.Field()
        place_of_birth = scrapy.Field()
        place_of_death = scrapy.Field()
        text = scrapy.Field()

class NWinnerSpider(scrapy.Spider):

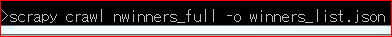

name = 'nwinners_full'

allowed_domains = ['en.wikipedia.org']

start_urls = [

"http://en.wikipedia.org/wiki/List_of_Nobel_laureates_by_country"

]

def parse(self, response):

filename = response.url.split('/')[-1]

h2s = response.xpath('//h2')

for h2 in h2s[2:]: #ここは2つ飛ばす

country = h2.xpath('span[@class="mw-headline"]/text()').extract()

if country:

winners = h2.xpath('following-sibling::ol[1]')

for w in winners.xpath('li'):

#このwはhttps://en.wikipedia.org/wiki/List_of_Nobel_laureates_by_country内を探して

#国ごとの下に並んでいる受賞者の情報を取得している

#このようなリスト -> <Selector xpath='li' data='<li><a href="/wiki/Bertha_von_Suttner" t'>

#このデータをprocess_winner_li関数に渡して

#wdata['link']には人物情報ページへの絶対アドレス

#wdata['name']には受賞者の名前

#wdata['year']受賞年

#wdata['category']受賞部門

#wdata['country'],wdata['born'] 国籍

wdata = process_winner_li(w, country[0])

#Requestクラスに渡した人物情報URLをself.parse_bioメソッドでクロールする

request = scrapy.Request(wdata['link'], callback=self.parse_bio, dont_filter=True)

request.meta['item'] = NWinnerItem(**wdata)

yield request

def parse_bio(self, response):

#引数のresponseはscrapy.http.response.html.HtmlResponseクラス

item = response.meta['item']

#hrefは各人物ごとのWikiDataのページのURL

href = response.xpath("//li[@id='t-wikibase']/a/@href").extract()

if href:

url = href[0]

#Requestクラスに人物のWikiDataのURLを渡してpasrse_wikidataメソッドでクロールさせる

request = scrapy.Request(url,\

callback=self.parse_wikidata,\

dont_filter=True)

request.meta['item'] = item

yield request

def parse_wikidata(self, response):

print("parse_wikidata\n")

item = response.meta['item']

property_codes = [

{'name':'date_of_birth', 'code':'P569'},

{'name':'date_of_death', 'code':'P570'},

{'name':'place_of_birth', 'code':'P19', 'link':True},

{'name':'place_of_death', 'code':'P20', 'link':True},

{'name':'gender', 'code':'P21', 'link':True}

]

p_template = '//*[@id="{code}"]/div[2]/div/div/div[2]' \

'/div[1]/div/div[2]/div[2]{link_html}/text()'

#p569は生誕日、p570没日、p19生まれた国、p20没した国、p21性別

for prop in property_codes:

link_html = ''

if prop.get('link'):

link_html = '/a'

sel = response.xpath(p_template.format(\

code=prop['code'], link_html=link_html))

if sel:

item[prop['name']] = sel[0].extract()

yield item
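To see what parse_wikidata actually queries, the template can be expanded outside Scrapy. The sketch below uses a hypothetical helper, build_property_xpath (not part of the book's code), that reproduces the p_template.format call for one property code. The long positional path reflects the Wikidata page layout of the time and is likely to stop matching when that layout changes.

```python
# Hypothetical helper mirroring the p_template.format(...) call in parse_wikidata.
P_TEMPLATE = ('//*[@id="{code}"]/div[2]/div/div/div[2]'
              '/div[1]/div/div[2]/div[2]{link_html}/text()')


def build_property_xpath(code, link=False):
    """Return the XPath used for one Wikidata property code such as 'P569'.

    Properties whose value links to another item (place of birth,
    place of death, gender) read the text of the <a> element instead.
    """
    link_html = '/a' if link else ''
    return P_TEMPLATE.format(code=code, link_html=link_html)


if __name__ == '__main__':
    for code, link in [('P569', False), ('P19', True)]:
        print(code, build_property_xpath(code, link))
```

The only difference between plain and linked properties is the extra /a step before /text().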

    def process_winner_li(w, country=None):
        """
        Process a winner's <li> tag, adding country of birth or nationality,
        as applicable.
        """
        wdata = {}
        wdata['link'] = BASE_URL + w.xpath('a/@href').extract()[0]
        text = ' '.join(w.xpath('descendant-or-self::text()').extract())
        # text looks like 'César Milstein , Physiology or Medicine, 1984'
        wdata['name'] = text.split(',')[0].strip()

        year = re.findall(r'\d{4}', text)
        if year:
            wdata['year'] = int(year[0])
        else:
            wdata['year'] = 0
            print('Oops, no year in ', text)

        category = re.findall(
            'Physics|Chemistry|Physiology or Medicine|Literature|Peace|Economics',
            text)
        if category:
            wdata['category'] = category[0]
        else:
            wdata['category'] = ''
            print('Oops, no category in ', text)

        # An asterisk (*) marks a laureate listed under this country by birth
        # rather than by nationality, so the country is stored in born_in instead.
        if country:
            if text.find('*') != -1:
                wdata['country'] = ''
                wdata['born_in'] = country
            else:
                wdata['country'] = country
                wdata['born_in'] = ''

        # store a copy of the link's text-string for any manual corrections
        wdata['text'] = text
        return wdata
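The string handling in process_winner_li does not depend on Scrapy, so it can be tried on a plain string. The sketch below is a hypothetical stand-alone version (parse_winner_text is not in the book) that applies the same rules to the joined text of an <li>: name before the first comma, first four-digit number as the year, first matching category, and the asterisk check deciding between country and born_in. The second and third sample strings and the country name are made up for illustration.

```python
import re

CATEGORIES = 'Physics|Chemistry|Physiology or Medicine|Literature|Peace|Economics'


def parse_winner_text(text, country=None):
    """Hypothetical stand-alone version of the text rules in process_winner_li."""
    wdata = {'name': text.split(',')[0].strip()}

    year = re.findall(r'\d{4}', text)          # first 4-digit number is the year
    wdata['year'] = int(year[0]) if year else 0

    category = re.findall(CATEGORIES, text)    # first matching prize category
    wdata['category'] = category[0] if category else ''

    if country:
        if '*' in text:
            # listed under this country by birth: record it as born_in
            wdata['country'], wdata['born_in'] = '', country
        else:
            wdata['country'], wdata['born_in'] = country, ''
    return wdata


if __name__ == '__main__':
    print(parse_winner_text('César Milstein , Physiology or Medicine, 1984'))
    print(parse_winner_text('Jane Doe *, Peace, 1999', 'Exampleland'))
    print(parse_winner_text('John Roe, Physics, 2001', 'Exampleland'))
```

Because the year is simply the first four-digit number in the text, any other four-digit number appearing before the year in an entry would be picked up instead; the stored text field is what makes such cases easy to correct by hand.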

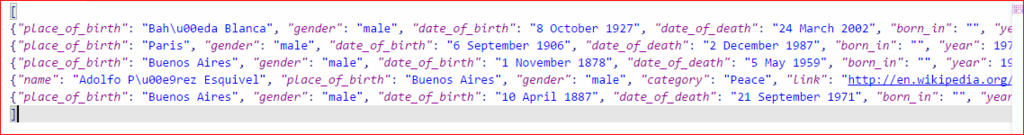

Only the Argentina entries were collected.