I have switched to converting the Jupyter notebook directly to a Markdown file and pasting the result here.

8.4 DataFrame

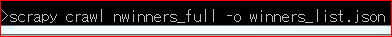

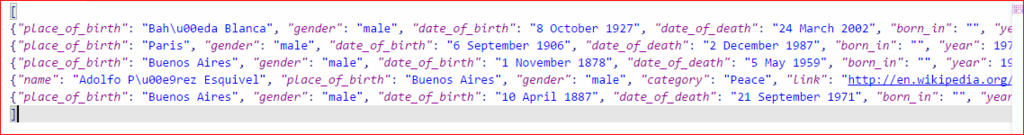

    import pandas as pd

    PATH = "/home/beetle/myproject/DataVisualization/nobel_winners/dataviz-with-python-and-js/Ch06_Heavyweight_Scraping_with_Scrapy/winner_list.json"
    df = pd.read_json(PATH)
    df.head(3)

| | born_in | category | country | date_of_birth | date_of_death | gender | link | name | place_of_birth | place_of_death | text | year |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | | Economics | Austria | 8 May 1899 | 23 March 1992 | male | http://en.wikipedia.org/wiki/Friedrich_Hayek | Friedrich Hayek | Vienna | Freiburg im Breisgau | Friedrich Hayek , Economics, 1974 | 1974 |
| 1 | | Physiology or Medicine | Austria | 7 November 1903 | 27 February 1989 | male | http://en.wikipedia.org/wiki/Konrad_Lorenz | Konrad Lorenz | Vienna | Vienna | Konrad Lorenz , Physiology or Medicine, 1973 | 1973 |
| 2 | Austria | Physiology or Medicine | | 20 November 1886 | 12 June 1982 | male | http://en.wikipedia.org/wiki/Karl_von_Frisch | Karl von Frisch * | Vienna | Munich | Karl von Frisch *, Physiology or Medicine, 1973 | 1973 |
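To try `pd.read_json` without the scraped file, a minimal sketch can feed it an in-memory JSON string instead of a path. The two records are taken from the table above and trimmed to four columns; `SAMPLE_JSON` and `sample_df` are names made up for this illustration and are not part of the notebook.

```python
import io

import pandas as pd

# Hypothetical stand-in for winner_list.json: two records, four columns only.
SAMPLE_JSON = """
[
  {"name": "Friedrich Hayek", "category": "Economics", "country": "Austria", "year": 1974},
  {"name": "Konrad Lorenz", "category": "Physiology or Medicine", "country": "Austria", "year": 1973}
]
"""

# read_json accepts a file-like object as well as a file path.
sample_df = pd.read_json(io.StringIO(SAMPLE_JSON))

print(sample_df.shape)    # (rows, columns)
print(sample_df.head(3))  # at most the first three rows
```

A list of JSON objects maps to one row per object, which is presumably also the layout of the scraped `winner_list.json`.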

8.4.1 Index

    print(df.columns)
    print(df.index)
    df = df.set_index('name')
    print(df.loc['Albert Einstein'])
    df = df.reset_index()

Output:

    Index(['born_in', 'category', 'country', 'date_of_birth', 'date_of_death',
           'gender', 'link', 'name', 'place_of_birth', 'place_of_death', 'text',
           'year'],
          dtype='object')
    Int64Index([   0,    1,    2,    3,    4,    5,    6,    7,    8,    9,
                ...
                1048, 1049, 1050, 1051, 1052, 1053, 1054, 1055, 1056, 1057],
               dtype='int64', length=1058)
                     born_in  category  country      date_of_birth  date_of_death  \
    name
    Albert Einstein           Physics   Switzerland  14 March 1879  18 April 1955
    Albert Einstein           Physics   Germany      14 March 1879  18 April 1955

                     gender  link  \
    name
    Albert Einstein  male    http://en.wikipedia.org/wiki/Albert_Einstein
    Albert Einstein  male    http://en.wikipedia.org/wiki/Albert_Einstein

                     place_of_birth  place_of_death  \
    name
    Albert Einstein  Ulm             Princeton
    Albert Einstein  Ulm             Princeton

                     text                                               year
    name
    Albert Einstein  Albert Einstein , born in Germany , Physics, ...  1921
    Albert Einstein  Albert Einstein , Physics, 1921                   1921
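The output above has two rows for `'Albert Einstein'` because index labels do not have to be unique. A small sketch of that behaviour follows, using a hand-made frame rather than the scraped data; `toy`, `by_name`, and `restored` are illustrative names only.

```python
import pandas as pd

# Hand-made sample mirroring the two Einstein entries plus one other winner.
toy = pd.DataFrame(
    {
        "name": ["Albert Einstein", "Albert Einstein", "Konrad Lorenz"],
        "country": ["Switzerland", "Germany", "Austria"],
        "year": [1921, 1921, 1973],
    }
)

by_name = toy.set_index("name")

# A label that occurs twice yields a DataFrame; a unique label yields a Series.
einstein = by_name.loc["Albert Einstein"]
lorenz = by_name.loc["Konrad Lorenz"]
print(type(einstein).__name__, type(lorenz).__name__)

# is_unique reports whether any label is repeated.
print(by_name.index.is_unique)

# reset_index() moves 'name' back into an ordinary column.
restored = by_name.reset_index()
print(list(restored.columns))
```

Checking `index.is_unique` before relying on `.loc` is one way to avoid being surprised by a DataFrame where a single row was expected.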

8.4.2 Rows and Columns

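    print(df.iloc[2])
    print(df.ix[2])                  # .ix is deprecated (removed in pandas 1.0); with the default integer index this matches df.iloc[2]
    df = df.set_index('name')
    print(df.ix['Albert Einstein'])  # deprecated .ix; same as df.loc['Albert Einstein']
    print(df.ix[2])                  # deprecated .ix; with a string index an integer falls back to position, i.e. df.iloc[2]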

Output:

    name            Karl von Frisch *
    born_in         Austria
    category        Physiology or Medicine
    country
    date_of_birth   20 November 1886
    date_of_death   12 June 1982
    gender          male
    link            http://en.wikipedia.org/wiki/Karl_von_Frisch
    place_of_birth  Vienna
    place_of_death  Munich
    text            Karl von Frisch *, Physiology or Medicine, 1973
    year            1973
    Name: 2, dtype: object
    name            Karl von Frisch *
    born_in         Austria
    category        Physiology or Medicine
    country
    date_of_birth   20 November 1886
    date_of_death   12 June 1982
    gender          male
    link            http://en.wikipedia.org/wiki/Karl_von_Frisch
    place_of_birth  Vienna
    place_of_death  Munich
    text            Karl von Frisch *, Physiology or Medicine, 1973
    year            1973
    Name: 2, dtype: object
                     born_in  category  country      date_of_birth  date_of_death  \
    name
    Albert Einstein           Physics   Switzerland  14 March 1879  18 April 1955
    Albert Einstein           Physics   Germany      14 March 1879  18 April 1955

                     gender  link  \
    name
    Albert Einstein  male    http://en.wikipedia.org/wiki/Albert_Einstein
    Albert Einstein  male    http://en.wikipedia.org/wiki/Albert_Einstein

                     place_of_birth  place_of_death  \
    name
    Albert Einstein  Ulm             Princeton
    Albert Einstein  Ulm             Princeton

                     text                                               year
    name
    Albert Einstein  Albert Einstein , born in Germany , Physics, ...  1921
    Albert Einstein  Albert Einstein , Physics, 1921                   1921
    born_in         Austria
    category        Physiology or Medicine
    country
    date_of_birth   20 November 1886
    date_of_death   12 June 1982
    gender          male
    link            http://en.wikipedia.org/wiki/Karl_von_Frisch
    place_of_birth  Vienna
    place_of_death  Munich
    text            Karl von Frisch *, Physiology or Medicine, 1973
    year            1973
    Name: Karl von Frisch *, dtype: object

    /home/beetle/anaconda3/lib/python3.6/site-packages/ipykernel_launcher.py:2: DeprecationWarning:
    .ix is deprecated. Please use
    .loc for label based indexing or
    .iloc for positional indexing

    See the documentation here:
    http://pandas.pydata.org/pandas-docs/stable/indexing.html#deprecate_ix

    /home/beetle/anaconda3/lib/python3.6/site-packages/ipykernel_launcher.py:4: DeprecationWarning:
    .ix is deprecated. Please use
    .loc for label based indexing or
    .iloc for positional indexing

    See the documentation here:
    http://pandas.pydata.org/pandas-docs/stable/indexing.html#deprecate_ix
      after removing the cwd from sys.path.
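Since the warning recommends `.loc` and `.iloc`, here is a minimal sketch of the same two lookups without `.ix`. It runs on a three-row hand-made frame, not the scraped data, and `toy`, `by_position`, and `by_label` are illustrative names.

```python
import pandas as pd

# Hand-made sample indexed by winner name.
toy = pd.DataFrame(
    {
        "name": ["Friedrich Hayek", "Konrad Lorenz", "Karl von Frisch *"],
        "year": [1974, 1973, 1973],
    }
).set_index("name")

# Positional lookup: the third row, whatever its label is.
by_position = toy.iloc[2]

# Label lookup: the row whose index label matches exactly.
by_label = toy.loc["Karl von Frisch *"]

print(by_position.name)
print(by_label["year"])
```

Keeping the two accessors separate removes the ambiguity `.ix` had, where an integer could mean either a label or a position depending on the index type.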

8.4.3 Groups

    df = df.groupby('category')  # note: df now holds a DataFrameGroupBy object
    print(df.groups.keys())
    # Pick out only the Physics winners
    phy_group = df.get_group('Physics')
    print(phy_group.head(3))

Output:

    dict_keys(['', 'Chemistry', 'Economics', 'Literature', 'Peace', 'Physics', 'Physiology or Medicine'])
                       born_in  category  country        date_of_birth  \
    name
    Brian Schmidt               Physics   Australia      24 February 1967
    Percy W. Bridgman           Physics   United States  21 April 1882
    Isidor Isaac Rabi           Physics   United States  29 July 1898

                       date_of_death    gender  \
    name
    Brian Schmidt      NaN              male
    Percy W. Bridgman  20 August 1961   male
    Isidor Isaac Rabi  11 January 1988  male

                       link  \
    name
    Brian Schmidt      http://en.wikipedia.org/wiki/Brian_Schmidt
    Percy W. Bridgman  http://en.wikipedia.org/wiki/Percy_W._Bridgman
    Isidor Isaac Rabi  http://en.wikipedia.org/wiki/Isidor_Isaac_Rabi

                       place_of_birth  place_of_death  \
    name
    Brian Schmidt      Missoula        NaN
    Percy W. Bridgman  Cambridge       Randolph
    Isidor Isaac Rabi  Rymanów         New York City

                       text                                               year
    name
    Brian Schmidt      Brian Schmidt , born in the United States , P...  2011
    Percy W. Bridgman  Percy W. Bridgman , Physics, 1946                 1946
    Isidor Isaac Rabi  Isidor Isaac Rabi , born in Austria , Physics...  1944
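As a variation on the grouping step, the sketch below stores the `groupby` result under its own name so that the original frame stays available, and adds a per-category count. The three rows are a hand-made subset of the winners shown in this post; `toy`, `by_category`, and `counts` are illustrative names.

```python
import pandas as pd

# Hand-made sample: two Physics winners and one Economics winner.
toy = pd.DataFrame(
    {
        "name": ["Brian Schmidt", "Percy W. Bridgman", "Friedrich Hayek"],
        "category": ["Physics", "Physics", "Economics"],
        "year": [2011, 1946, 1974],
    }
)

# Keep the original frame and store the grouping separately.
by_category = toy.groupby("category")

print(sorted(by_category.groups.keys()))

# get_group returns the rows of one group as a DataFrame.
physics = by_category.get_group("Physics")
print(len(physics))

# size() counts the rows in each group.
counts = by_category.size()
print(counts["Physics"], counts["Economics"])
```

On the real data, the empty-string key in `groups.keys()` would show up in `size()` as well, which gives a quick count of the rows that still need a category.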