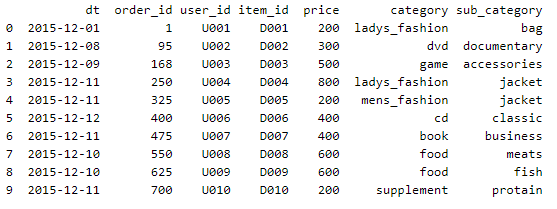

5章 ユーザーを把握するためのデータ抽出

5-1 ユーザー全体の特徴・傾向を見つける

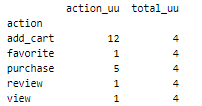

5-1-1 ユーザーのアクション数を集計する

import pandas as pd

import psycopg2

import numpy as np

conn = psycopg2.connect("dbname=BigData host=localhost user=testuser")

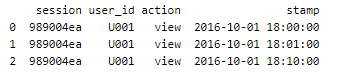

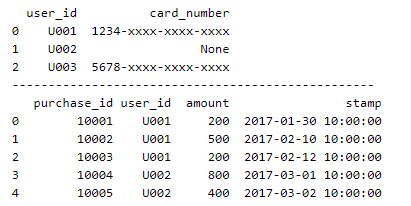

df = pd.read_sql("SELECT * FROM action_log", conn)

print(df)

#わからなくなった

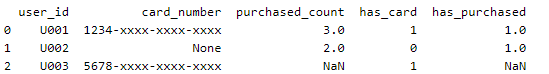

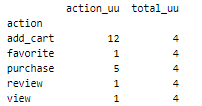

total_uu = df['session'].nunique()

dg1 = df.groupby('action')

df1 = pd.DataFrame({

'action_uu':dg1['session'].count(),

'total_uu':total_uu

}

)

print(df1)

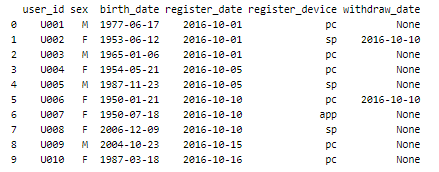

5-1-2 年齢別区分を集計する

import pandas as pd

import psycopg2

import numpy as np

import datetime

import math as m

conn = psycopg2.connect("dbname=BigData host=localhost user=testuser")

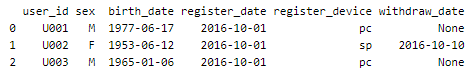

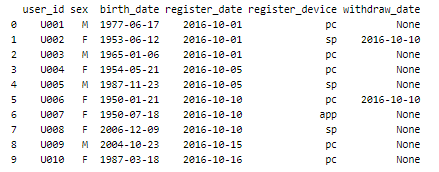

df = pd.read_sql("SELECT * FROM mst_users", conn)

print(df)

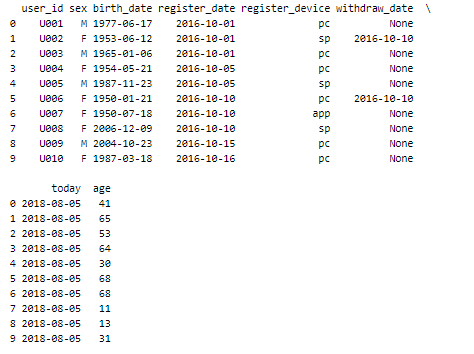

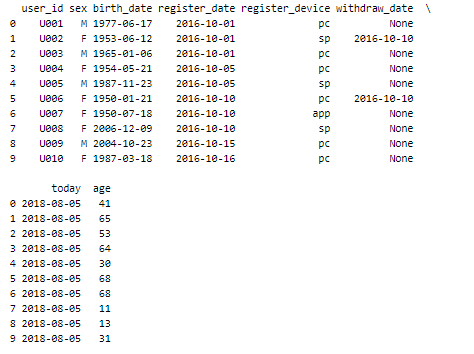

df['birth_date'] = pd.to_datetime(df['birth_date'])

df['today'] = pd.to_datetime(datetime.date.today())

dff = (df['today'] - df['birth_date'])

dff = dff.dt.total_seconds()/3600/24/365

df['age'] = dff.map(m.floor)

print(df)

def age_category(age):

if 4 <= age <= 12:

return 'C'

if 13 <= age <= 19:

return 'T'

if 20 <= age <= 34:

return '1'

if 35 <= age <= 49:

return '2'

if 50 <= age:

return '3'

def sex_cut(x):

if 'C' in x:

x = 'C'

if 'T' in x:

x = 'T'

return x

df['age_category'] = df['age'].map(age_category)

df['category'] = df['sex'] + df['age_category']

df['category'] = df['category'].map(sex_cut)

print(df[['user_id','sex','age','category']])