6.8 Scraping Text and Images with a Pipeline

#nwinners_minbio_spider.py

import scrapy

import re

BASE_URL = 'http://en.wikipedia.org'

class NWinnerItemBio(scrapy.Item):

link = scrapy.Field()

name = scrapy.Field() #このnameフィールドは使っていない?

mini_bio = scrapy.Field()

image_urls = scrapy.Field()

bio_image = scrapy.Field() #このbio_imageは使っていない?

images = scrapy.Field() #このimagesは使っていない

class NWinnerSpiderBio(scrapy.Spider):

""" Scrapes the Nobel prize biography pages for portrait images and a biographical snippet """

name = 'nwinners_minibio'

allowed_domains = ['en.wikipedia.org']

start_urls = [

#"http://en.wikipedia.org/wiki/List_of_Nobel_laureates_by_country"

"http://en.wikipedia.org/wiki/List_of_Nobel_laureates_by_country?dfdfd"

]

#For Scrapy v 1.0+, custom_settings can override the item pipelines in settings

custom_settings = {

'ITEM_PIPELINES': {'nobel_winners.pipelines.NobelImagesPipeline':1},

}

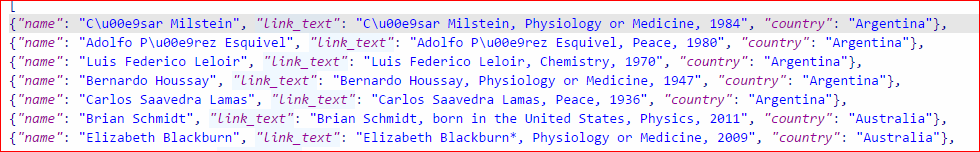

def parse(self, response):

#filename = response.url.split('/')[-1] #このfilenameいらないような

h2s = response.xpath('//h2')

for h2 in h2s[2:]: #2から

country = h2.xpath('span[@class="mw-headline"]/text()').extract()

if country:

winners = h2.xpath('following-sibling::ol[1]')

for w in winners.xpath('li'):

wdata = {}

wdata['link'] = BASE_URL + w.xpath('a/@href').extract()[0]

#print(wdata)

request = scrapy.Request(wdata['link'],

callback=self.get_mini_bio,

dont_filter=True)

request.meta['item'] = NWinnerItemBio(**wdata)

yield request

def get_mini_bio(self, response):

BASE_URL_ESCAPED = 'http:\/\/en.wikipedia.org'

item = response.meta['item']

# cache image

item['image_urls'] = []

# Get the URL of the winner's picture, contained in the infobox table

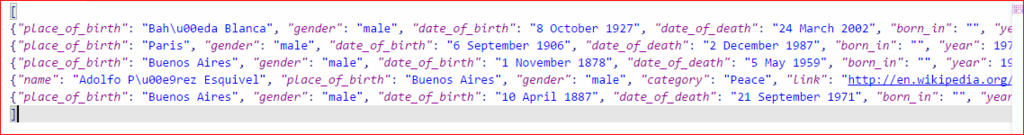

img_src = response.xpath('//table[contains(@class,"infobox")]//img/@src')

if img_src:

item['image_urls'] = ['http:' + img_src[0].extract()]

mini_bio = ''

# Get the paragraphs in the biography's body-text

ps = response.xpath('//*[@id="mw-content-text"]/div/p[text() or normalize-space(.)=""]').extract() #本のとおりだとmini_bioは取得できない、/div/p[text()...とすること

# Add introductory biography paragraphs till the empty breakpoint

for p in ps:

if p == '<p></p>':

break

mini_bio += p

# correct for wiki-links

mini_bio = mini_bio.replace('href="/wiki', 'href="' + BASE_URL + '/wiki')

mini_bio = mini_bio.replace('href="#', 'href="' + item['link'] + '#')

item['mini_bio'] = mini_bio

yield item
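The string handling at the end of `get_mini_bio` can be tried out without running a crawl. The following is a minimal plain-Python sketch, assuming the paragraphs arrive as HTML strings the way `.extract()` returns them; `absolute_image_url` and `build_mini_bio` are hypothetical helper names, not part of the spider. For the portrait URL it swaps the hard-coded `'http:'` prefix for `urllib.parse.urljoin`, which resolves a protocol-relative `src` against the page URL.

```python
from urllib.parse import urljoin

BASE_URL = 'http://en.wikipedia.org'


def absolute_image_url(page_url, src):
    """Resolve an <img src> (often protocol-relative, '//upload...') against the page URL.

    Hypothetical helper for illustration.
    """
    return urljoin(page_url, src)


def build_mini_bio(paragraphs, page_url):
    """Concatenate intro paragraphs up to the first empty <p></p>, then absolutize links.

    Hypothetical helper that mirrors the loop and replacements in get_mini_bio.
    """
    mini_bio = ''
    for p in paragraphs:
        if p == '<p></p>':  # an empty paragraph marks the end of the intro
            break
        mini_bio += p
    # Site-relative wiki links become absolute links
    mini_bio = mini_bio.replace('href="/wiki', 'href="' + BASE_URL + '/wiki')
    # In-page anchors (e.g. footnotes) point back at the biography page
    mini_bio = mini_bio.replace('href="#', 'href="' + page_url + '#')
    return mini_bio


if __name__ == '__main__':
    page = BASE_URL + '/wiki/Marie_Curie'
    print(absolute_image_url(page, '//upload.wikimedia.org/a/b/Curie.jpg'))
    paras = ['<p>Marie <a href="/wiki/Physics">physics</a><a href="#cite_note-1">[1]</a></p>',
             '<p></p>',
             '<p>Not included</p>']
    print(build_mini_bio(paras, page))
```

Inside a Scrapy callback, `response.urljoin(src)` performs the same resolution against the page's URL, so the image URL then takes whichever scheme the biography page was actually served over.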

```python
# pipelines.py
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

import scrapy
from scrapy.exceptions import DropItem

try:
    # For Scrapy v1.0+:
    from scrapy.pipelines.images import ImagesPipeline
except ImportError:
    # Scrapy < 1.0
    from scrapy.contrib.pipeline.images import ImagesPipeline


class NobelImagesPipeline(ImagesPipeline):

    def get_media_requests(self, item, info):
        # One download request per image URL collected by the spider
        for image_url in item['image_urls']:
            yield scrapy.Request(image_url)

    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples; for each successful
        # download, info['path'] is the stored file path relative to IMAGES_STORE
        image_paths = [x['path'] for ok, x in results if ok]
        if image_paths:
            item['bio_image'] = image_paths[0]
        return item


class DropNonPersons(object):
    """ Remove non-person winners """

    def process_item(self, item, spider):
        if not item['gender']:
            raise DropItem("No gender for %s" % item['name'])
        return item
```
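According to the Scrapy documentation, `item_completed` receives `results`, a list of `(success, file_info_or_error)` 2-tuples, one per request yielded by `get_media_requests`. On success the second element is a dict with (among others) `url`, `path` and `checksum` keys, `path` being relative to `IMAGES_STORE`; on failure it is a Twisted `Failure`. The sketch below fakes such a list, with made-up values and a plain `Exception` standing in for the `Failure`, to show what the list comprehension in `item_completed` keeps. `first_image_path` is a hypothetical helper.

```python
# Simulated `results` argument of ImagesPipeline.item_completed (all values made up).
results = [
    (True, {'url': 'http://upload.wikimedia.org/a/b/Curie.jpg',
            'path': 'full/0a1b2c.jpg',
            'checksum': 'abc123'}),
    (False, Exception('download failed')),  # stands in for a twisted Failure
]


def first_image_path(results):
    """Return the stored path of the first successfully downloaded image, or None."""
    image_paths = [info['path'] for ok, info in results if ok]
    return image_paths[0] if image_paths else None


item = {'image_urls': ['http://upload.wikimedia.org/a/b/Curie.jpg']}
path = first_image_path(results)
if path:
    item['bio_image'] = path
print(item)
```

Failed downloads are simply skipped, so `bio_image` only appears on items whose portrait was actually saved.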

```python
# settings.py
# -*- coding: utf-8 -*-

# Scrapy settings for nobel_winners project
#
# For simplicity, this file contains only the most important settings by
# default. All the other settings are documented here:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#

import os

BOT_NAME = 'nobel_winners'

SPIDER_MODULES = ['nobel_winners.spiders']
NEWSPIDER_MODULE = 'nobel_winners.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# USER_AGENT = 'nobel_winners (+http://www.yourdomain.com)'
# e.g., to 'impersonate' a browser:
# USER_AGENT = "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/27.0.1453.93 Safari/537.36"

HTTPCACHE_ENABLED = True

# ITEM_PIPELINES = {'scrapy.contrib.pipeline.images.ImagesPipeline': 1}
# We can define the ITEM_PIPELINES here or in their respective spiders by using the
# custom_settings variable (Scrapy v1.0+) (see the nwinners_minibio spider for an example)
# For earlier versions of Scrapy (<1.0), define the ITEM_PIPELINES variable here:
ITEM_PIPELINES = {'nobel_winners.pipelines.NobelImagesPipeline': 1}
# ITEM_PIPELINES = {'nobel_winners.pipelines.DropNonPersons': 1}

# We're storing the images in an 'images' subdirectory of the Scrapy project's root
IMAGES_STORE = 'images'
```

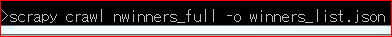

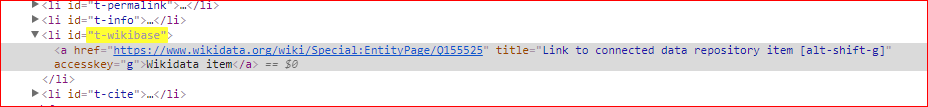
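Assuming the default `ImagesPipeline.file_path` is not overridden (`NobelImagesPipeline` does not override it), each downloaded portrait is stored under `IMAGES_STORE` as `full/<SHA1 of the image URL>.jpg`, since the pipeline converts images to JPEG. The sketch below reproduces that naming rule with `hashlib` so that an entry in `image_urls` can be matched to a file on disk; `expected_image_file` is a hypothetical helper, and the rule is only expected to match for plain ASCII URLs that Scrapy does not need to re-escape.

```python
import hashlib
import os

IMAGES_STORE = 'images'


def expected_image_file(image_url, store=IMAGES_STORE):
    """Path where Scrapy's default ImagesPipeline naming would store this URL's image.

    Hypothetical helper: sha1(url) hex digest + '.jpg' under <store>/full/.
    """
    image_guid = hashlib.sha1(image_url.encode('utf-8')).hexdigest()
    return os.path.join(store, 'full', image_guid + '.jpg')


if __name__ == '__main__':
    url = 'http://upload.wikimedia.org/a/b/Curie.jpg'
    path = expected_image_file(url)
    print(path, 'exists' if os.path.exists(path) else 'missing')
```

Running this from the project root against a few URLs taken from minibios.json shows directly whether the corresponding files were ever written.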

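Breakdown of minibios.json

Number of records: 1067

Keys: only link, image_urls and mini_bio

The winners' portraits were not downloaded the way the book says they should be.

This may be explained a little further on in the book, so I'm skipping it for now.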
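The exported file itself can show how far the image step got. The sketch below assumes minibios.json was produced by a feed export such as `scrapy crawl nwinners_minibio -o minibios.json`, i.e. a single JSON array of items; `load_items` and `summarize_items` are hypothetical helpers. It counts the records, how often each key appears, and how many items carry a non-empty `image_urls` and a `bio_image`.

```python
import json
from collections import Counter


def load_items(path):
    """Load a feed export written as one JSON array (hypothetical helper)."""
    with open(path, encoding='utf-8') as f:
        return json.load(f)


def summarize_items(items):
    """Count records, key usage and image-related fields in a list of scraped items."""
    key_counts = Counter(key for item in items for key in item)
    return {
        'records': len(items),
        'keys': dict(key_counts),
        'with_image_urls': sum(1 for item in items if item.get('image_urls')),
        'with_bio_image': sum(1 for item in items if item.get('bio_image')),
    }
```

For example, `summarize_items(load_items('minibios.json'))`. If many items have `image_urls` but none has `bio_image`, then `item_completed` either never ran or (if it only records successful downloads) found none. Two things that may be worth checking, as guesses rather than a diagnosis: `ImagesPipeline` is disabled when Pillow is not installed, and media pipelines do not follow redirects unless `MEDIA_ALLOW_REDIRECTS = True`, which matters if the `http:` image URLs are redirected to `https:`.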