Trying out EDA for ASHRAE

square_feet

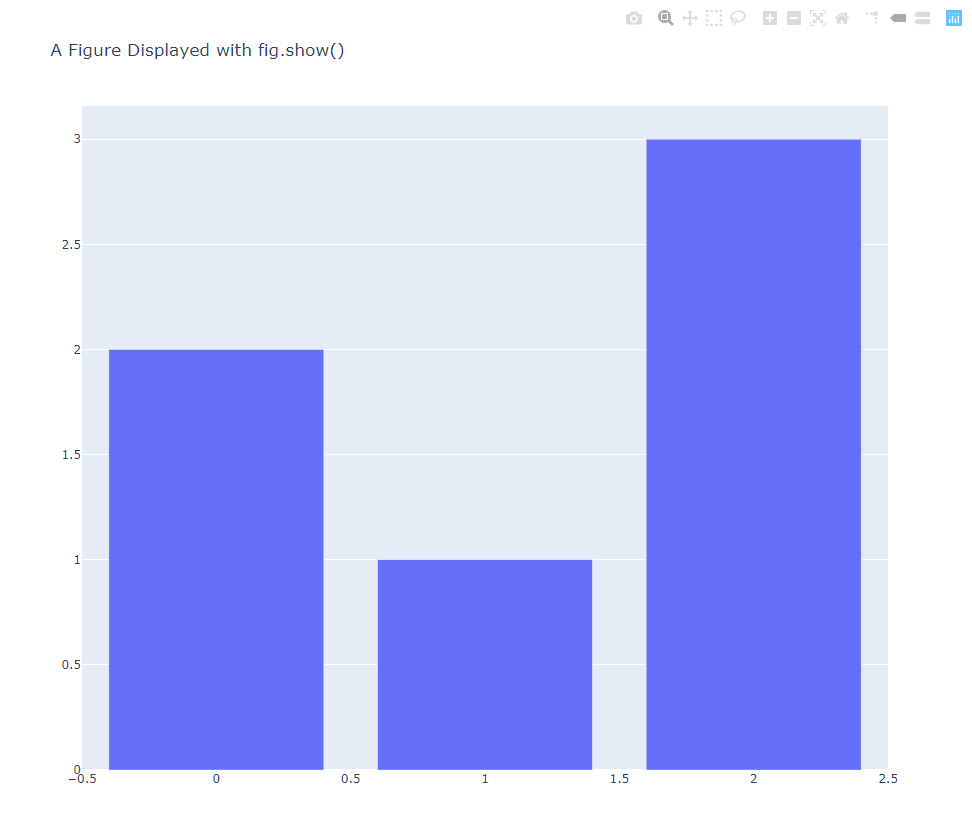

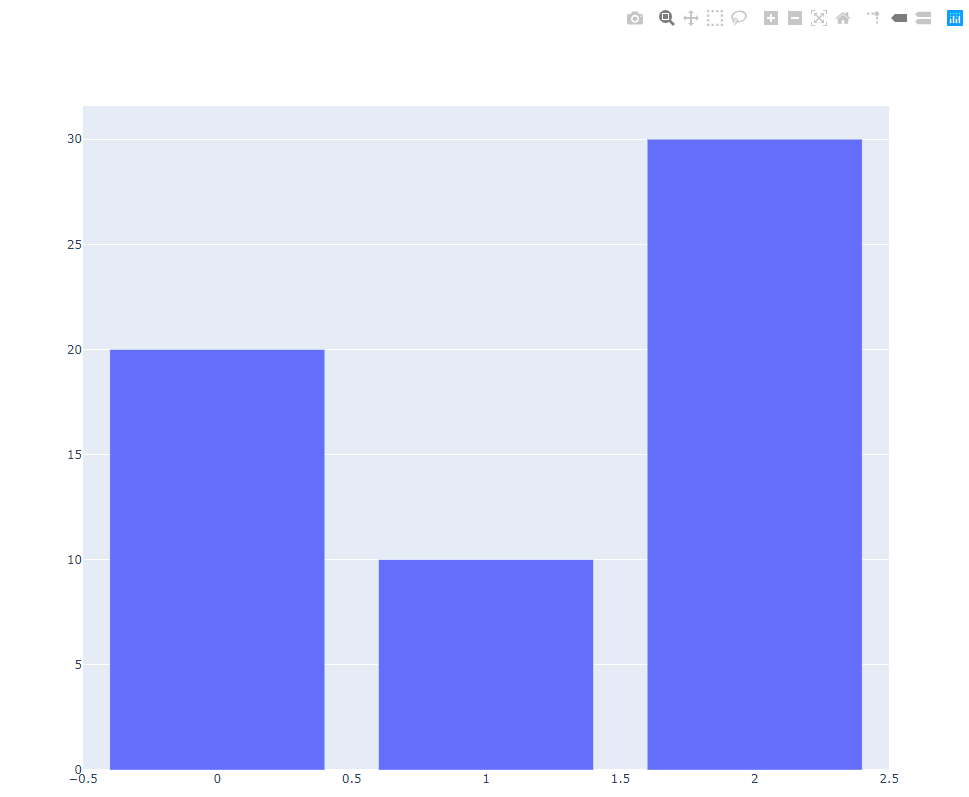

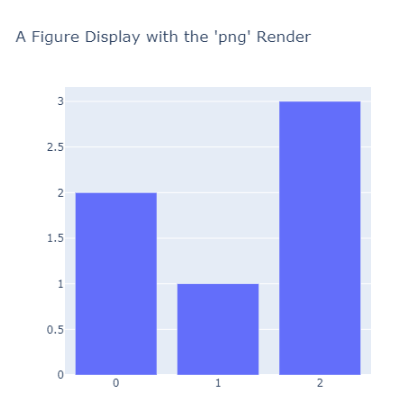

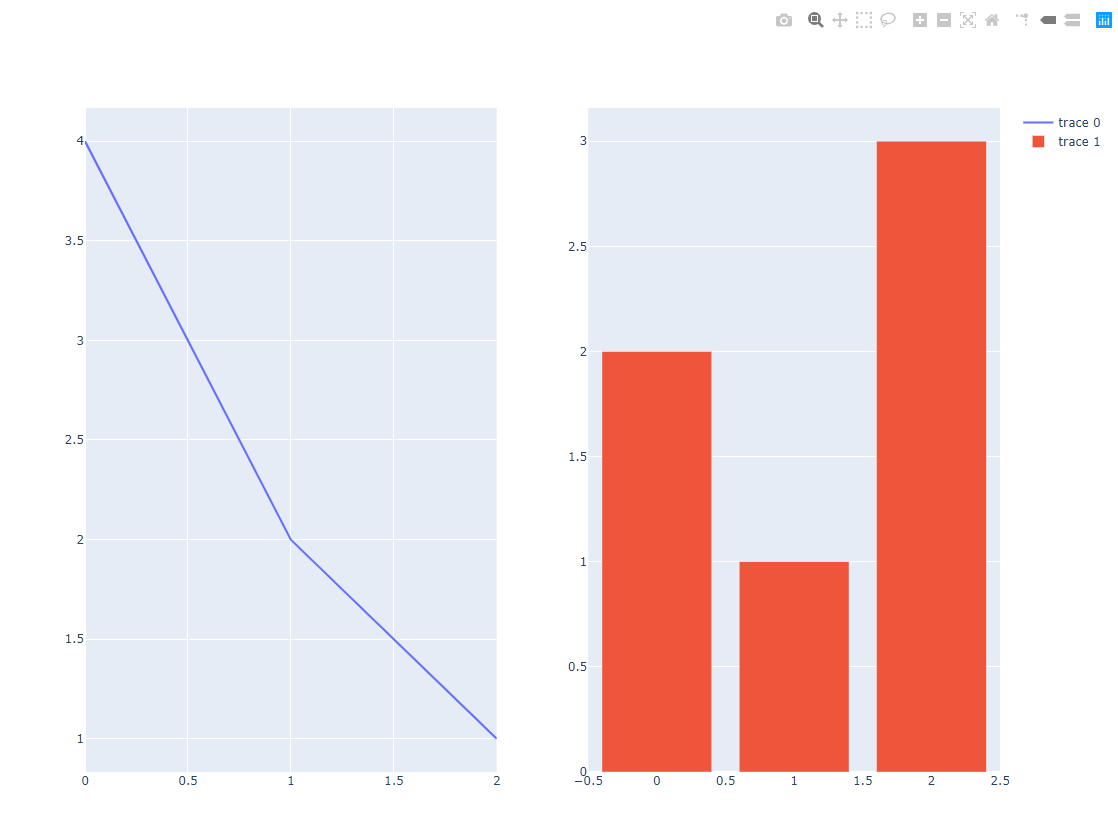

import gc

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns

fig, axes = plt.subplots(2, 2, figsize=(14, 12))

# kdeplot: kernel density estimate

sns.kdeplot(train['square_feet'], ax=axes[0][0], label='Train');

sns.kdeplot(test['square_feet'], ax=axes[0][0], label='Test');

sns.boxplot(x=train['square_feet'], ax=axes[1][0]);

sns.boxplot(x=test['square_feet'], ax=axes[1][1]);

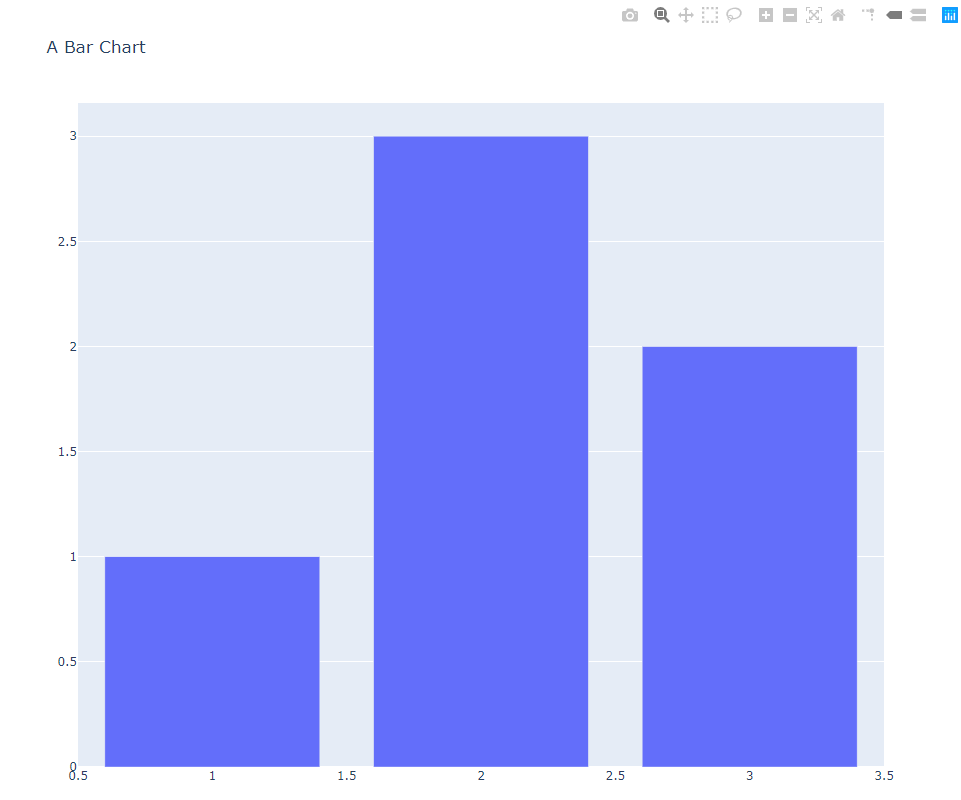

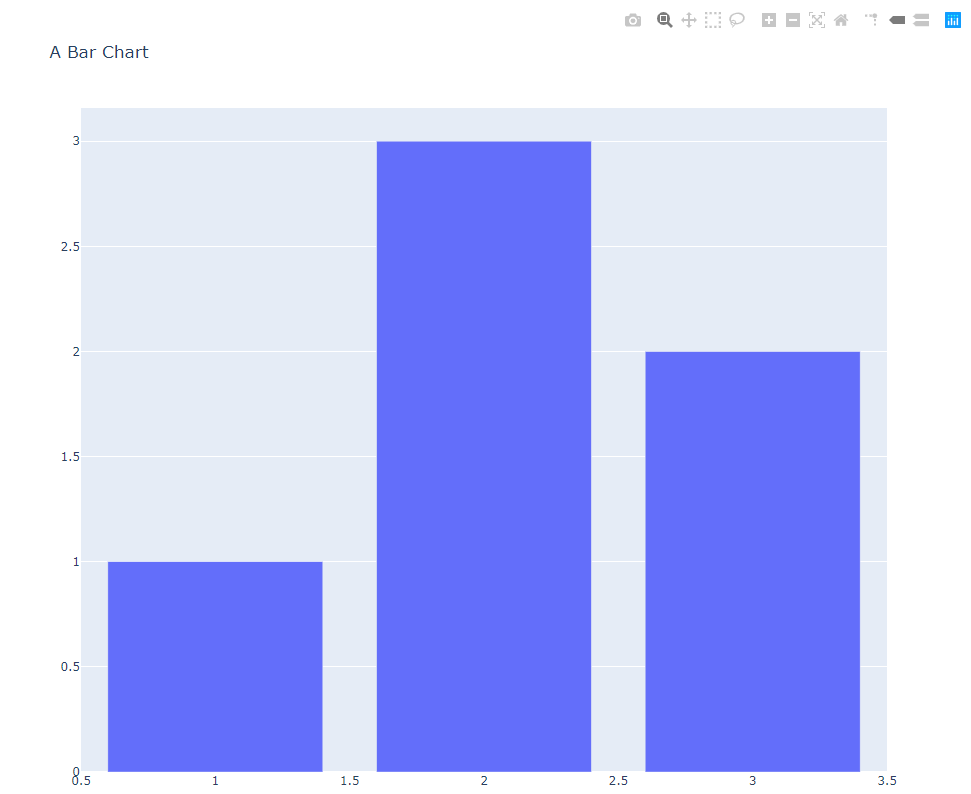

pd.DataFrame({'train': [train['square_feet'].isnull().sum()], 'test': [test['square_feet'].isnull().sum()]}).plot(kind='bar', rot=0, ax=axes[0][1]);

axes[0][0].legend();

axes[0][0].set_title('Train/Test KDE distribution');

axes[0][1].set_title('Number of NaNs');

axes[1][0].set_title('Boxplot for train');

axes[1][1].set_title('Boxplot for test');

gc.collect();
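
The 'Number of NaNs' panel above is drawn from a one-row DataFrame with one column per dataset. The following is a minimal sketch of that frame on its own, using toy data; `toy_train` and `toy_test` are hypothetical stand-ins for the real `train` and `test` frames, and their values are made up.

```python
import numpy as np
import pandas as pd

# Toy stand-ins for the real train/test frames (hypothetical values).
toy_train = pd.DataFrame({'square_feet': [1000.0, np.nan, 52000.0, 7400.0]})
toy_test = pd.DataFrame({'square_feet': [np.nan, np.nan, 880.0]})

# One row, one column per dataset: the frame that .plot(kind='bar') draws.
nan_counts = pd.DataFrame({
    'train': [toy_train['square_feet'].isnull().sum()],
    'test': [toy_test['square_feet'].isnull().sum()],
})
print(nan_counts)
```

Printing the frame before plotting is a cheap way to check the exact counts, which are hard to read off a bar chart.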

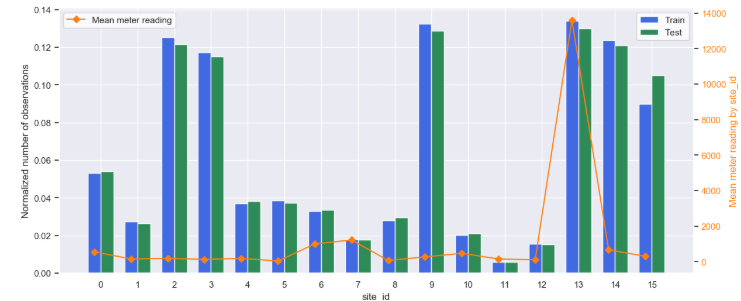

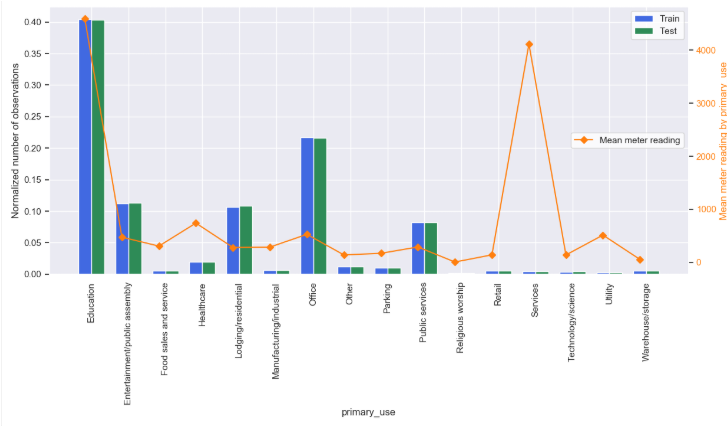

fig, axes = plt.subplots(1, 2, figsize=(14, 6))

train['site_id'].value_counts(dropna=False, normalize=True).sort_index().plot(kind='bar', rot=0, ax=axes[0]).set_xlabel('site_id value');

train[train['building_id']!=1099]['site_id'].value_counts(dropna=False, normalize=True).sort_index().plot(kind='bar', rot=0, ax=axes[1]).set_xlabel('site_id value');

ax2 = axes[0].twinx()

ax3 = axes[1].twinx()

train.groupby('site_id')['meter_reading'].mean().sort_index().plot(ax=ax2, style='D-', grid=False, color='tab:orange');

train[train['building_id']!=1099].groupby('site_id')['meter_reading'].mean().sort_index().plot(ax=ax3, style='D-', grid=False, color='tab:orange');

ax2.set_ylabel('Mean meter reading', color='tab:orange', fontsize=14);

ax3.set_ylabel('Mean meter reading', color='tab:orange', fontsize=14);

ax2.tick_params(axis='y', labelcolor='tab:orange');

ax3.tick_params(axis='y', labelcolor='tab:orange');

plt.subplots_adjust(wspace=0.4)

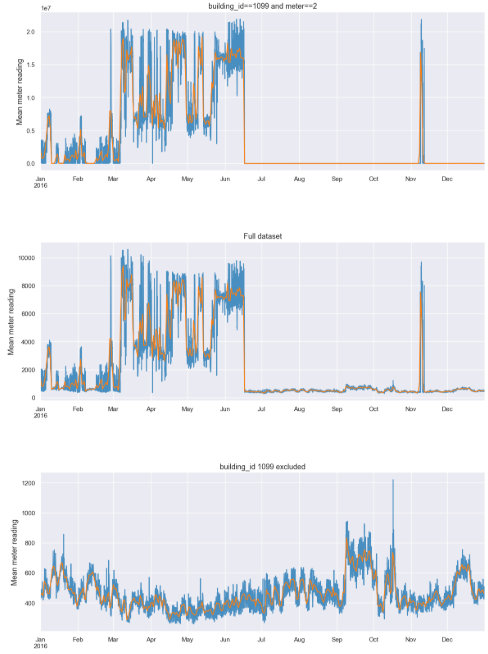

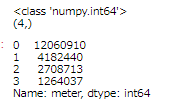

axes[0].set_title('WITH building_id 1099');

axes[1].set_title('WITHOUT building_id 1099');
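
The two panels compare per-site means with and without building 1099. The sketch below uses made-up numbers to show why one extreme building can dominate a site's mean; the site ids, the other building ids and all readings are hypothetical.

```python
import pandas as pd

# Hypothetical readings: building 1099 reports values far above its neighbours.
toy = pd.DataFrame({
    'site_id':       [0, 0, 0, 1, 1],
    'building_id':   [1099, 1100, 1101, 1200, 1201],
    'meter_reading': [1e6, 100.0, 200.0, 150.0, 250.0],
})

# Same two aggregations as in the plots above.
mean_with = toy.groupby('site_id')['meter_reading'].mean()
mean_without = (
    toy[toy['building_id'] != 1099]
    .groupby('site_id')['meter_reading']
    .mean()
)

print(mean_with)
print(mean_without)
```

In this toy data only the site that contains building 1099 changes; the other site's mean is identical in both series.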

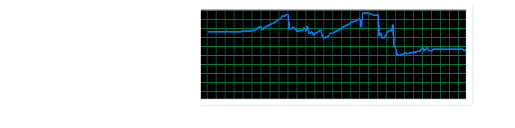

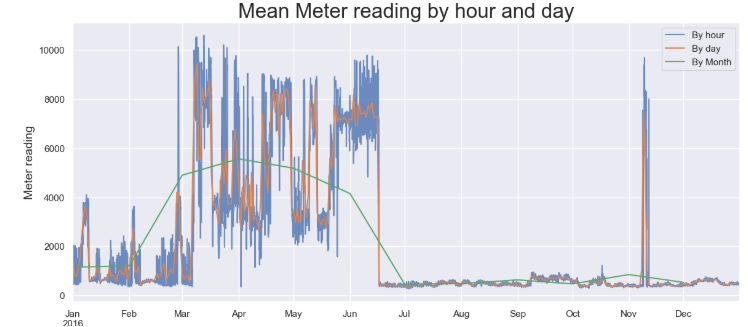

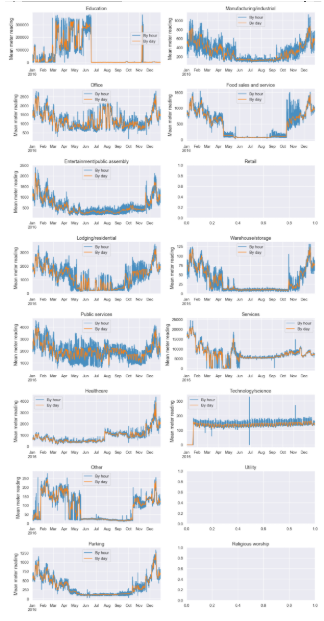

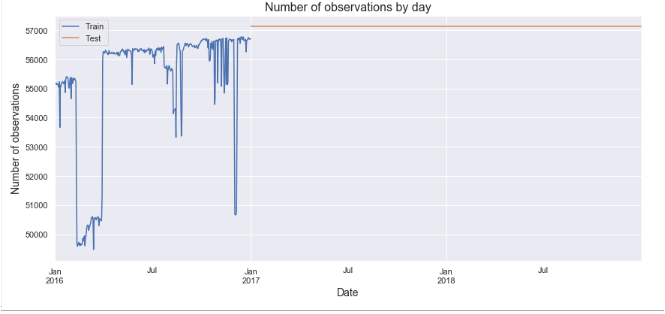

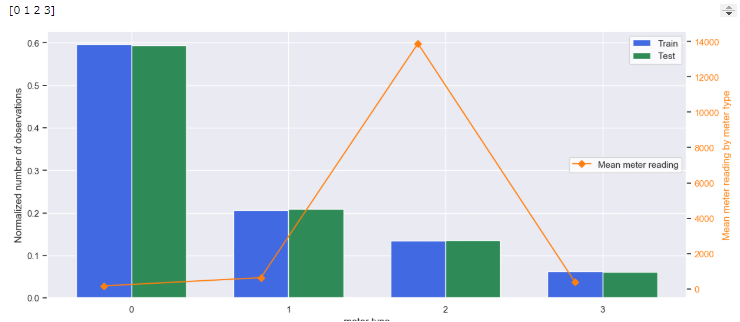

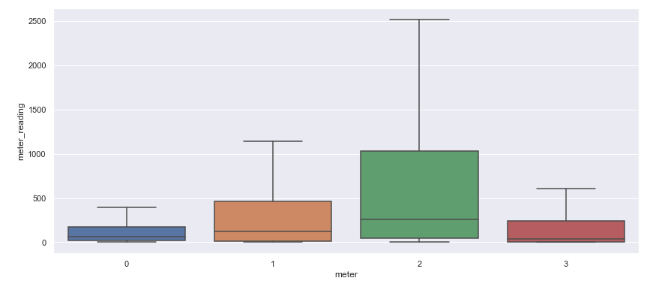

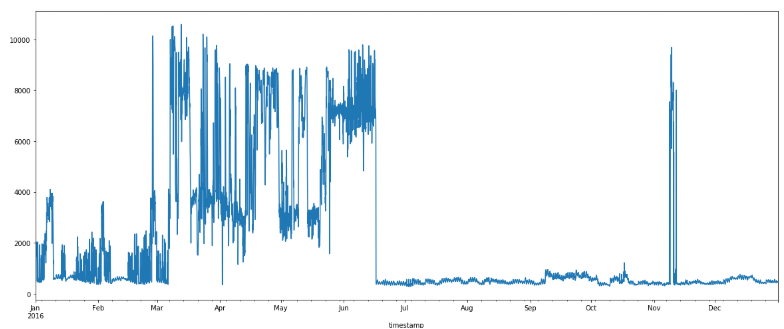

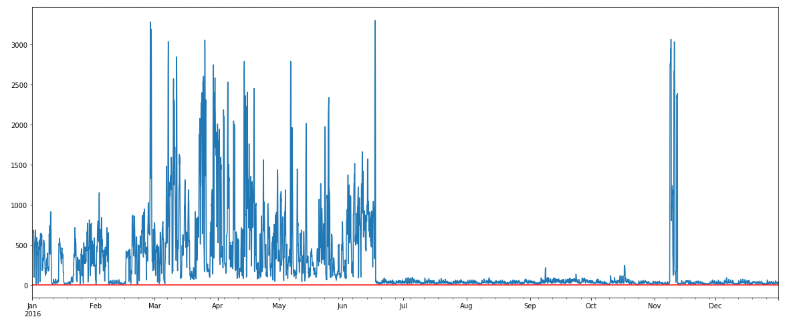

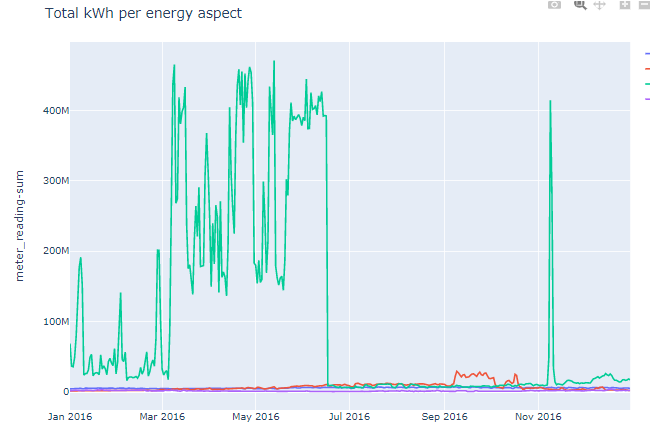

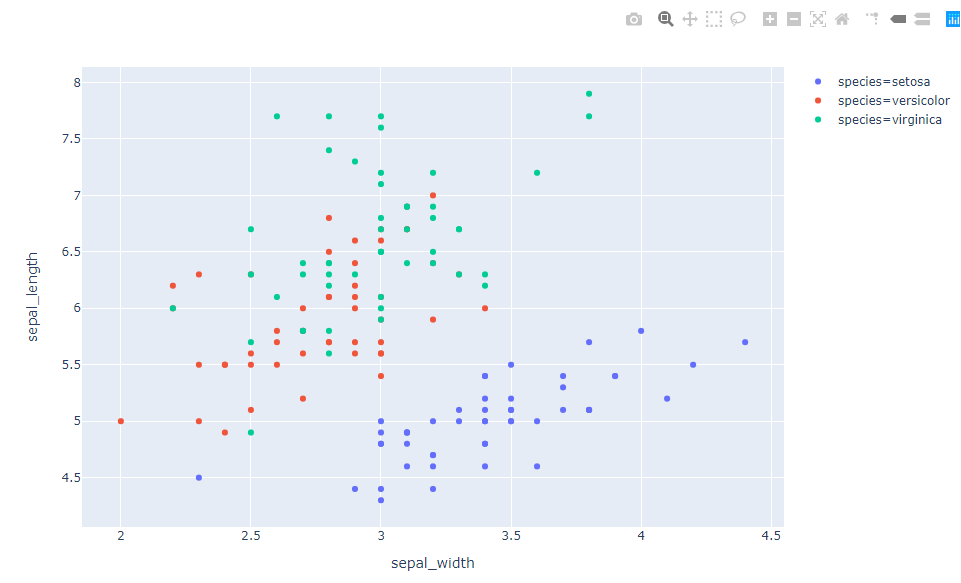

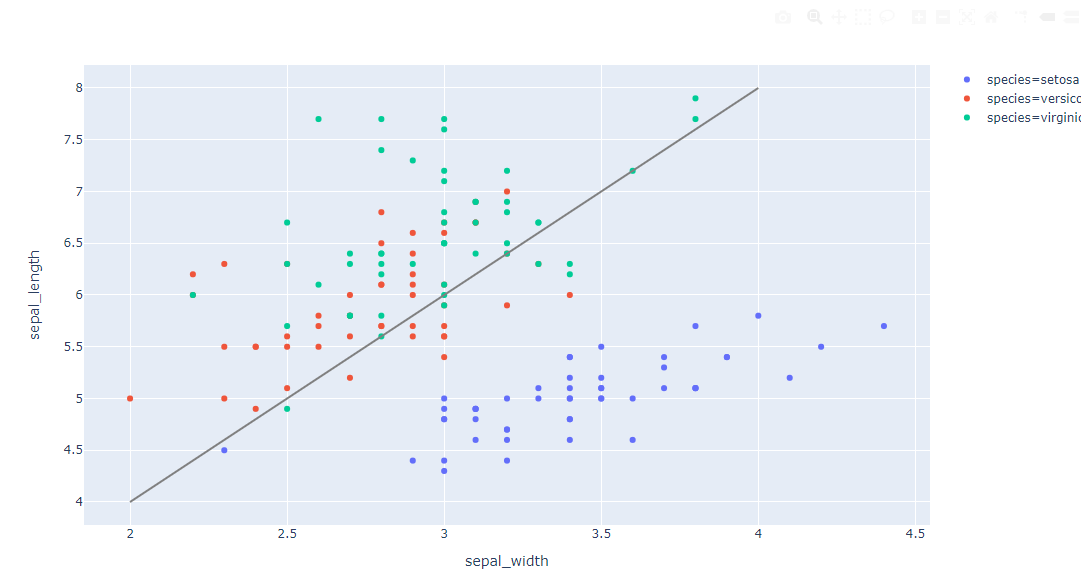

fig, axes = plt.subplots(1,1,figsize=(14, 6))

train[train['building_id'] != 1099].groupby('building_id')['meter_reading'].mean().plot();

axes.set_title('Mean meter reading by building_id', fontsize=14);

axes.set_ylabel('Mean meter reading', fontsize=14);

year_built

fig, axes = plt.subplots(1,1,figsize=(14, 6))

# sort_index() sorts by the index (row or column labels)

train['year_built'].value_counts(dropna=False).sort_index().plot(ax=axes).set_xlabel('year_built');

test['year_built'].value_counts(dropna=False).sort_index().plot(ax=axes).set_ylabel('Number of examples');

axes.legend(['Train', 'Test']);

axes.set_title('Number of examples per year_built', fontsize=16);

fig, axes = plt.subplots(1,1,figsize=(14, 6))

train.groupby('year_built')['meter_reading'].mean().plot().set_ylabel('Mean meter reading');

axes.set_title('Mean meter reading by year_built of the building', fontsize=16);

fig, axes = plt.subplots(2, 2, figsize=(14, 12))

sns.kdeplot(train['year_built'], ax=axes[0][0], label='Train');

sns.kdeplot(test['year_built'], ax=axes[0][0], label='Test');

sns.boxplot(x=train['year_built'], ax=axes[1][0]);

sns.boxplot(x=test['year_built'], ax=axes[1][1]);

pd.DataFrame({'train': [train['year_built'].isnull().sum()], 'test': [test['year_built'].isnull().sum()]}).plot(kind='bar', rot=0, ax=axes[0][1]);

axes[0][0].legend();

axes[0][0].set_title('Train/Test KDE distribution');

axes[0][1].set_title('Number of NaNs');

axes[1][0].set_title('Boxplot for train');

axes[1][1].set_title('Boxplot for test');

gc.collect();

floor_count (number of floors)

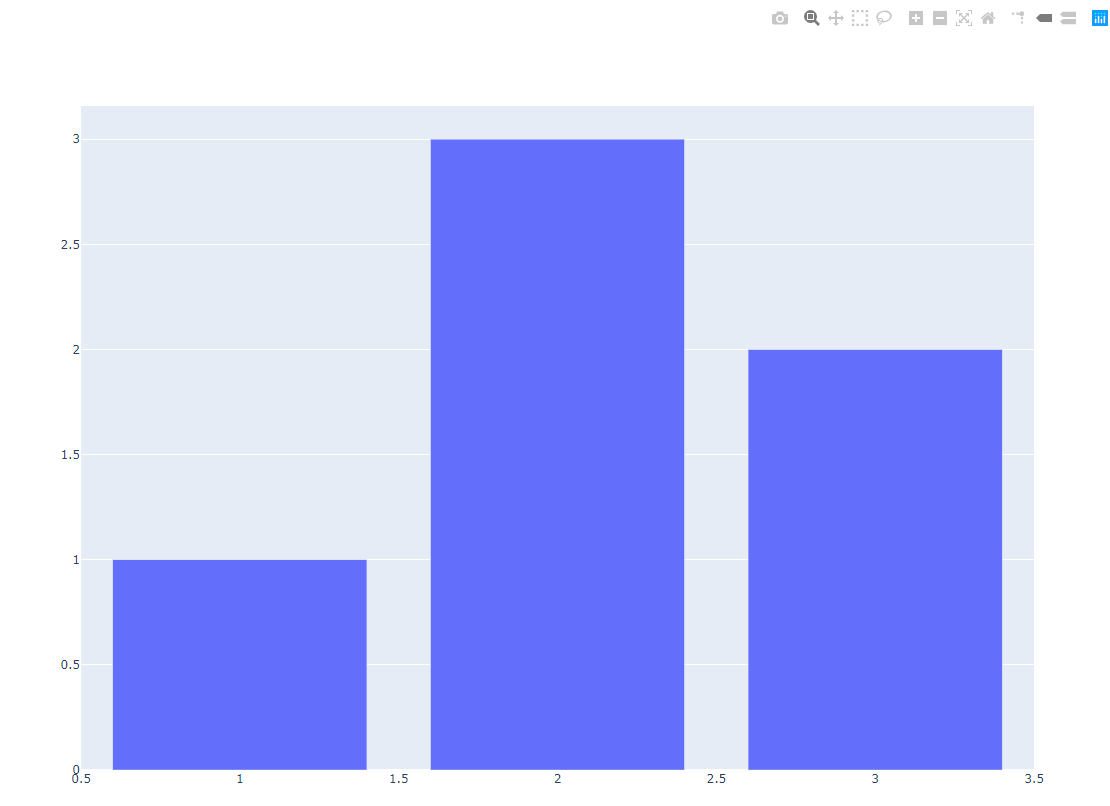

fig, axes = plt.subplots(1, 2, figsize=(14, 6))

sns.kdeplot(train['floor_count'], label='Train', ax=axes[0]);

sns.kdeplot(test['floor_count'], label='Test', ax=axes[0]);

test.index += len(train)

print(train['floor_count'].dropna().head())

print(train['floor_count'].dropna().tail())

axes[1].plot(train['floor_count'], '.', label='Train');

axes[1].plot(test['floor_count'], '.', label='Test');

test.index -= len(train)

axes[0].set_title('Train/Test KDE distribution');

axes[1].set_title('Index versus value: Train/Test distribution');

gc.collect();
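
The `test.index += len(train)` line shifts the test index past the end of train, so that on the index-versus-value panel the test points are drawn to the right of the train points instead of on top of them. The shift is undone afterwards. Here is a small sketch of the same trick on toy frames (hypothetical names and values):

```python
import pandas as pd

toy_train = pd.DataFrame({'floor_count': [1.0, 3.0, 2.0]})
toy_test = pd.DataFrame({'floor_count': [5.0, 4.0]})

# Shift the test index past the end of train: 0, 1 -> 3, 4.
toy_test.index += len(toy_train)
shifted = list(toy_test.index)

# ... plotting against the index would happen here ...

# Restore the original 0-based index.
toy_test.index -= len(toy_train)
restored = list(toy_test.index)

print(shifted, restored)
```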

fig, axes = plt.subplots(1,1,figsize=(14, 6))

# Mean meter reading per floor count, computed directly with groupby (NaN floor counts are dropped)
train.groupby('floor_count')['meter_reading'].mean().plot(kind='bar', rot=0, ax=axes);

axes.set_xlabel('Floor count');

axes.set_ylabel('Mean meter reading');

axes.set_title('Mean meter reading by floor count');

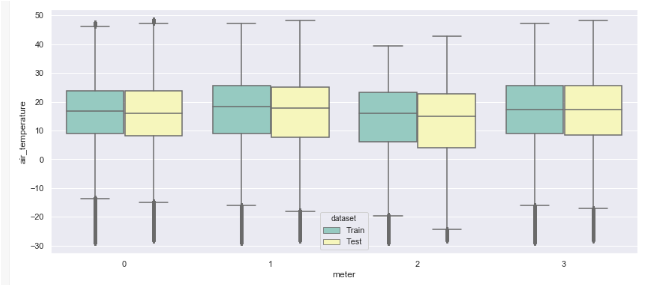

air_temperature

fig, axes = plt.subplots(1,1,figsize=(14, 6), dpi=100)

train[['timestamp', 'air_temperature']].set_index('timestamp').resample('H').mean()['air_temperature'].plot(ax=axes, alpha=0.8, label='By hour', color='tab:blue').set_ylabel('Mean temperature', fontsize=14);

test[['timestamp', 'air_temperature']].set_index('timestamp').resample('H').mean()['air_temperature'].plot(ax=axes, alpha=0.8, color='tab:blue', label='');

train[['timestamp', 'air_temperature']].set_index('timestamp').resample('D').mean()['air_temperature'].plot(ax=axes, alpha=1, label='By day', color='tab:orange');

test[['timestamp', 'air_temperature']].set_index('timestamp').resample('D').mean()['air_temperature'].plot(ax=axes, alpha=1, color='tab:orange', label='');

axes.legend();

axes.text(train['timestamp'].iloc[9000000], -3, 'Train', fontsize=16);

axes.text(test['timestamp'].iloc[29400000], 30, 'Test', fontsize=16);

axes.axvspan(test['timestamp'].min(), test['timestamp'].max(), facecolor='green', alpha=0.2);
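
The 'By hour' and 'By day' curves come from the same column resampled at two frequencies. The sketch below does this on synthetic data, with made-up values. The hourly rule is written as `'60min'`, which bins the same way as the `'H'` rule used above and is chosen here only so that the sketch does not depend on which alias spelling a given pandas version prefers.

```python
import numpy as np
import pandas as pd

# Two days of synthetic readings every 30 minutes (made-up values).
ts = pd.date_range('2016-01-01', periods=96, freq='30min')
toy = pd.DataFrame({'timestamp': ts, 'air_temperature': np.arange(96, dtype=float)})

series = toy.set_index('timestamp')['air_temperature']
hourly = series.resample('60min').mean()  # one mean per hour
daily = series.resample('D').mean()       # one mean per calendar day

print(len(hourly), len(daily))
print(daily)
```

The daily curve averages over many more points per bin, which is why it looks smoother than the hourly one in the figure.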

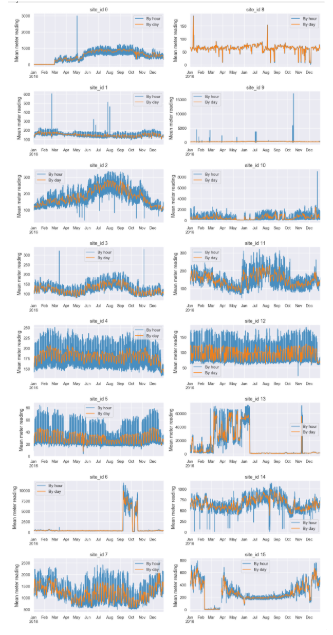

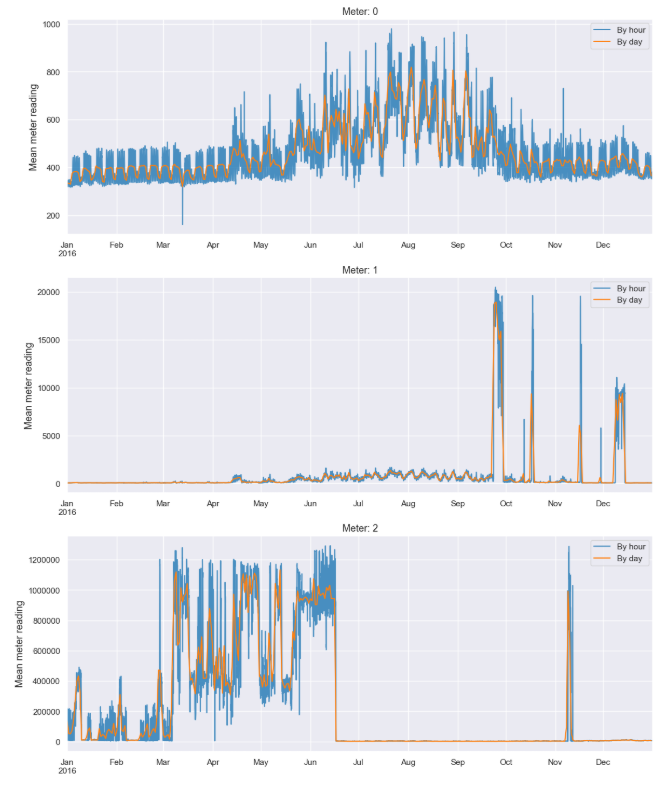

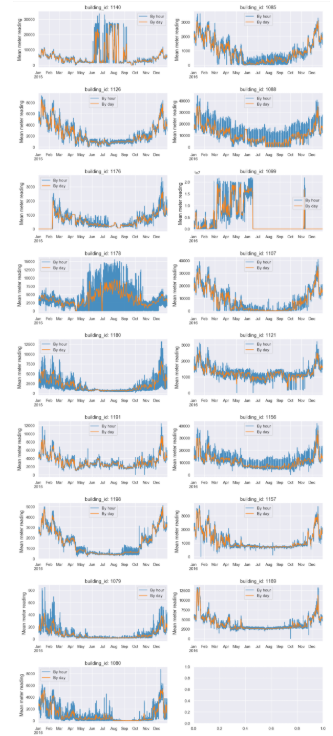

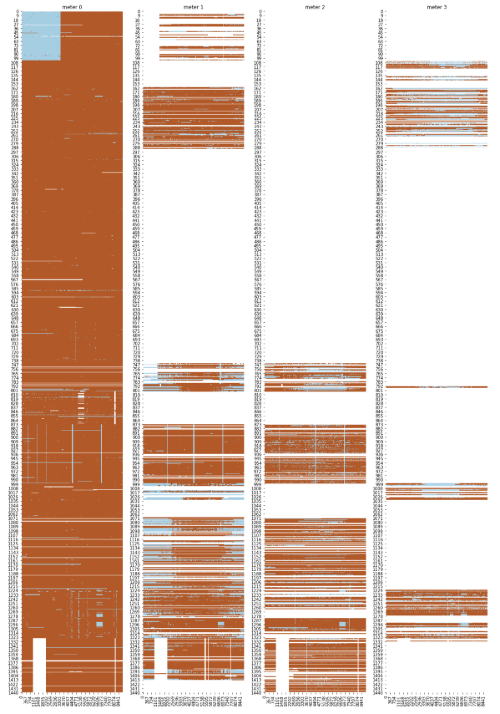

fig, axes = plt.subplots(8,2,figsize=(14, 30), dpi=100)

for i in range(train['site_id'].nunique()):
    train[train['site_id'] == i][['timestamp', 'air_temperature']].set_index('timestamp').resample('H').mean()['air_temperature'].plot(ax=axes[i%8][i//8], alpha=0.8, label='By hour', color='tab:blue').set_ylabel('Mean temperature', fontsize=13);
    test[test['site_id'] == i][['timestamp', 'air_temperature']].set_index('timestamp').resample('H').mean()['air_temperature'].plot(ax=axes[i%8][i//8], alpha=0.8, color='tab:blue', label='').set_xlabel('')
    train[train['site_id'] == i][['timestamp', 'air_temperature']].set_index('timestamp').resample('D').mean()['air_temperature'].plot(ax=axes[i%8][i//8], alpha=1, label='By day', color='tab:orange')
    test[test['site_id'] == i][['timestamp', 'air_temperature']].set_index('timestamp').resample('D').mean()['air_temperature'].plot(ax=axes[i%8][i//8], alpha=1, color='tab:orange', label='').set_xlabel('')
    axes[i%8][i//8].legend();
    axes[i%8][i//8].set_title('site_id {}'.format(i), fontsize=13);
    axes[i%8][i//8].axvspan(test['timestamp'].min(), test['timestamp'].max(), facecolor='green', alpha=0.2);

plt.subplots_adjust(hspace=0.45)
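
The `axes[i%8][i//8]` indexing fills the 8x2 grid column by column: the first eight sites go down the left column and the next eight down the right. Here is a small sketch of that mapping, assuming 16 site ids (0 to 15), which is what the 8x2 layout implies:

```python
# Map 16 site ids onto an 8-row by 2-column grid, as axes[i % 8][i // 8] does.
n_rows, n_cols = 8, 2
positions = {i: (i % n_rows, i // n_rows) for i in range(n_rows * n_cols)}

for site_id, (row, col) in positions.items():
    print(site_id, '->', (row, col))
```

With more than 16 sites, `i // 8` would reach 2 and index past the last column, so the grid shape would have to grow with the number of sites.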

dew_temperature

fig, axes = plt.subplots(1,1,figsize=(14, 6), dpi=100)

train[['timestamp', 'dew_temperature']].set_index('timestamp').resample('H').mean()['dew_temperature'].plot(ax=axes, alpha=0.8, label='By hour', color='tab:blue').set_ylabel('Mean dew temperature', fontsize=14);

test[['timestamp', 'dew_temperature']].set_index('timestamp').resample('H').mean()['dew_temperature'].plot(ax=axes, alpha=0.8, color='tab:blue', label='');

train[['timestamp', 'dew_temperature']].set_index('timestamp').resample('D').mean()['dew_temperature'].plot(ax=axes, alpha=1, label='By day', color='tab:orange');

test[['timestamp', 'dew_temperature']].set_index('timestamp').resample('D').mean()['dew_temperature'].plot(ax=axes, alpha=1, color='tab:orange', label='');

axes.legend();

axes.text(train['timestamp'].iloc[9000000], -5, 'Train', fontsize=16);

axes.text(test['timestamp'].iloc[29400000], 16, 'Test', fontsize=16);

axes.axvspan(test['timestamp'].min(), test['timestamp'].max(), facecolor='green', alpha=0.2);

fig, axes = plt.subplots(8,2,figsize=(14, 30), dpi=100)

for i in range(train['site_id'].nunique()):
    train[train['site_id'] == i][['timestamp', 'dew_temperature']].set_index('timestamp').resample('H').mean()['dew_temperature'].plot(ax=axes[i%8][i//8], alpha=0.8, label='By hour', color='tab:blue').set_ylabel('Mean dew temperature', fontsize=13);
    test[test['site_id'] == i][['timestamp', 'dew_temperature']].set_index('timestamp').resample('H').mean()['dew_temperature'].plot(ax=axes[i%8][i//8], alpha=0.8, color='tab:blue', label='').set_xlabel('')
    train[train['site_id'] == i][['timestamp', 'dew_temperature']].set_index('timestamp').resample('D').mean()['dew_temperature'].plot(ax=axes[i%8][i//8], alpha=1, label='By day', color='tab:orange')
    test[test['site_id'] == i][['timestamp', 'dew_temperature']].set_index('timestamp').resample('D').mean()['dew_temperature'].plot(ax=axes[i%8][i//8], alpha=1, color='tab:orange', label='').set_xlabel('')
    axes[i%8][i//8].legend();
    axes[i%8][i//8].set_title('site_id {}'.format(i), fontsize=13);
    axes[i%8][i//8].axvspan(test['timestamp'].min(), test['timestamp'].max(), facecolor='green', alpha=0.2);

plt.subplots_adjust(hspace=0.45)

precip_depth_1_hr

fig, axes = plt.subplots(1,1,figsize=(14, 6), dpi=100)

train[['timestamp', 'precip_depth_1_hr']].set_index('timestamp').resample('M').mean()['precip_depth_1_hr'].plot(ax=axes, alpha=0.8, label='By month', color='tab:blue').set_ylabel('Mean precip_depth_1_hr', fontsize=14);

test[['timestamp', 'precip_depth_1_hr']].set_index('timestamp').resample('M').mean()['precip_depth_1_hr'].plot(ax=axes, alpha=0.8, color='tab:blue', label='');

axes.legend();

sea_level_pressure (sea-level pressure)

fig, axes = plt.subplots(1,1,figsize=(14, 6), dpi=100)

train[['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('H').mean()['sea_level_pressure'].plot(ax=axes, alpha=0.8, label='By hour', color='tab:blue').set_ylabel('Mean sea_level_pressure', fontsize=14);

test[['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('H').mean()['sea_level_pressure'].plot(ax=axes, alpha=0.8, color='tab:blue', label='');

train[['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('D').mean()['sea_level_pressure'].plot(ax=axes, alpha=1, label='By day', color='tab:orange');

test[['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('D').mean()['sea_level_pressure'].plot(ax=axes, alpha=1, color='tab:orange', label='');

axes.legend();

axes.text(train['timestamp'].iloc[9000000], 1004, 'Train', fontsize=16);

axes.text(test['timestamp'].iloc[21000000], 1032, 'Test', fontsize=16);

axes.axvspan(test['timestamp'].min(), test['timestamp'].max(), facecolor='green', alpha=0.2);

fig, axes = plt.subplots(8,2,figsize=(14, 30), dpi=100)

for i in range(train['site_id'].nunique()):
    train[train['site_id'] == i][['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('H').mean()['sea_level_pressure'].plot(ax=axes[i%8][i//8], alpha=0.8, label='By hour', color='tab:blue').set_ylabel('Mean sea_level_pressure', fontsize=13);
    test[test['site_id'] == i][['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('H').mean()['sea_level_pressure'].plot(ax=axes[i%8][i//8], alpha=0.8, color='tab:blue', label='').set_xlabel('')
    train[train['site_id'] == i][['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('D').mean()['sea_level_pressure'].plot(ax=axes[i%8][i//8], alpha=1, label='By day', color='tab:orange')
    test[test['site_id'] == i][['timestamp', 'sea_level_pressure']].set_index('timestamp').resample('D').mean()['sea_level_pressure'].plot(ax=axes[i%8][i//8], alpha=1, color='tab:orange', label='').set_xlabel('')
    axes[i%8][i//8].legend();
    axes[i%8][i//8].set_title('site_id {}'.format(i), fontsize=13);
    axes[i%8][i//8].axvspan(test['timestamp'].min(), test['timestamp'].max(), facecolor='green', alpha=0.2);

plt.subplots_adjust(hspace=0.45)

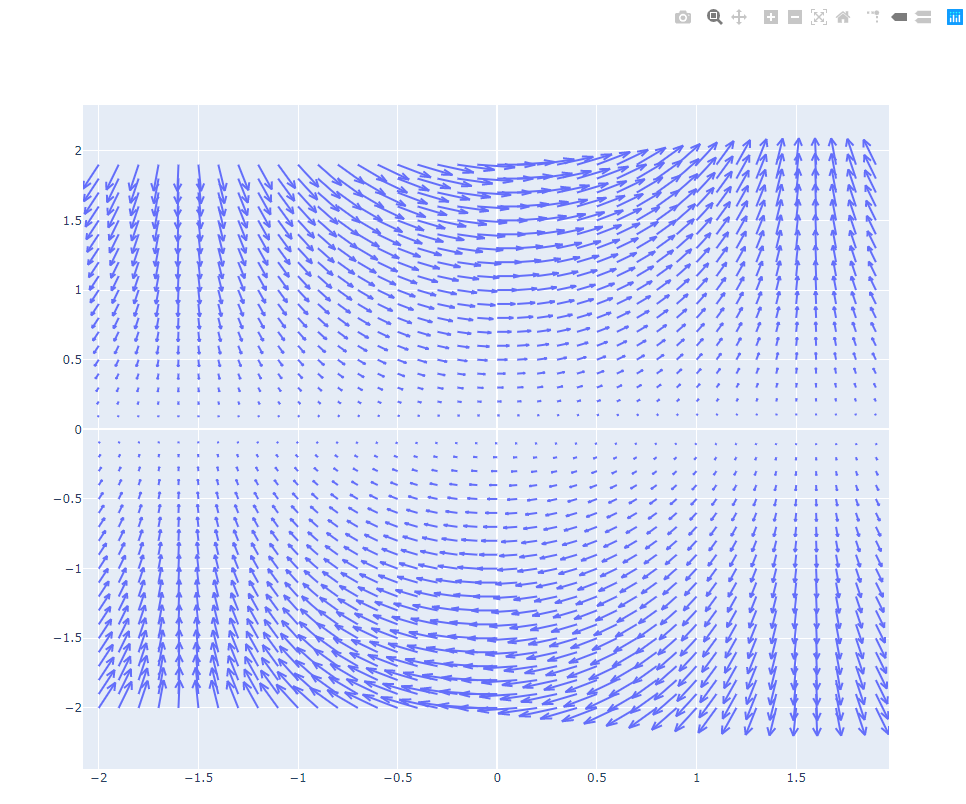

wind_direction & wind_speed (wind direction and wind speed)

def speed_labels(bins:list, units:str) -> list:

    # One label per bin: 'calm' for the first bin, '>x' for the open-ended last bin
    labels = list()
    for left, right in zip(bins[:-1], bins[1:]):
        if left == bins[0]:
            labels.append('calm')
        elif np.isinf(right):
            labels.append('>{} {}'.format(left, units))
        else:
            labels.append('{} - {} {}'.format(left, right, units))
    return labels

def _convert_dir(directions, N=None):

    if N is None:
        N = directions.shape[0]
    barDir = directions * np.pi/180. - np.pi/N
    barWidth = 2 * np.pi / N
    return barDir, barWidth
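
As a worked example of what `_convert_dir` returns, the sketch below feeds it the 24 sector directions used later (0 to 345 degrees in steps of 15) and converts the results back to degrees for readability. The function body is copied from above; only the printing is added.

```python
import numpy as np

def _convert_dir(directions, N=None):
    if N is None:
        N = directions.shape[0]
    barDir = directions * np.pi / 180. - np.pi / N  # radians, shifted back by half a sector
    barWidth = 2 * np.pi / N                        # width of one sector in radians
    return barDir, barWidth

directions = np.arange(0, 360, 15)  # 24 sector directions, in degrees
bar_dir, bar_width = _convert_dir(directions)

print(np.degrees(bar_width))    # sector width, in degrees
print(np.degrees(bar_dir[:3]))  # first three bar positions, in degrees
```

With 24 sectors each bar is 15 degrees wide, and every position is the direction minus half a sector (7.5 degrees).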

spd_bins = [-1, 0, 5, 10, 15, 20, 25, 30, np.inf]

spd_labels = speed_labels(spd_bins, units='m/s')
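
For reference, this is what `speed_labels` produces for the bins above. The sketch repeats the function so that it runs on its own, and appends `'calm'` directly for the first bin.

```python
import numpy as np

def speed_labels(bins, units):
    """Build one label per bin: 'calm' first, '>x' for the open-ended last bin."""
    labels = []
    for left, right in zip(bins[:-1], bins[1:]):
        if left == bins[0]:
            labels.append('calm')
        elif np.isinf(right):
            labels.append('>{} {}'.format(left, units))
        else:
            labels.append('{} - {} {}'.format(left, right, units))
    return labels

spd_bins = [-1, 0, 5, 10, 15, 20, 25, 30, np.inf]
spd_labels = speed_labels(spd_bins, units='m/s')
print(spd_labels)
# ['calm', '0 - 5 m/s', '5 - 10 m/s', '10 - 15 m/s', '15 - 20 m/s',
#  '20 - 25 m/s', '25 - 30 m/s', '>30 m/s']
```

Nine bin edges give eight labels, which is the count `pd.cut` expects when it is passed `labels=spd_labels`.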

dir_bins = np.arange(-7.5, 370, 15)

dir_labels = (dir_bins[:-1] + dir_bins[1:]) / 2

calm_count = train[train['wind_speed'] == 0].shape[0]

total_count = len(train)

rose = (
    train
    .assign(WindSpd_bins=lambda df: pd.cut(df['wind_speed'], bins=spd_bins, labels=spd_labels, right=True))
    .assign(WindDir_bins=lambda df: pd.cut(df['wind_direction'], bins=dir_bins, labels=dir_labels, right=False))
    .replace({'WindDir_bins': {360: 0}})
    .groupby(by=['WindSpd_bins', 'WindDir_bins'])
    .size()
    .unstack(level='WindSpd_bins')
    .fillna(0)
    .assign(calm=lambda df: calm_count / df.shape[0])
    .sort_index(axis=1)
    .applymap(lambda x: x / total_count * 100)
)

rose.drop(rose.index[0], inplace=True)
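
The `rose` table is a two-way binning: each row is assigned a speed bin and a direction bin, and the counts per cell are turned into percentages. The sketch below reproduces the idea on five made-up observations. It is a simplified variant, not the pipeline above: it uses four 90-degree sectors instead of 15-degree ones, and it wraps the 360-degree bin onto 0 with integer bin codes and a modulo rather than `.replace`. All names and values are hypothetical.

```python
import numpy as np
import pandas as pd

# Made-up observations: wind speed in m/s, direction in degrees.
toy = pd.DataFrame({
    'wind_speed':     [0.0, 2.0, 7.0, 3.0, 12.0],
    'wind_direction': [0.0, 10.0, 95.0, 100.0, 350.0],
})

spd_bins = [-1, 0, 5, 10, 15, np.inf]
spd_labels = ['calm', '0 - 5', '5 - 10', '10 - 15', '>15']
dir_bins = np.arange(-45, 450, 90)                # edges: -45, 45, ..., 405
dir_centres = (dir_bins[:-1] + dir_bins[1:]) / 2  # centres: 0, 90, 180, 270, 360

# Speed bins are closed on the right, so a speed of exactly 0 falls into 'calm'.
spd_bin = pd.cut(toy['wind_speed'], bins=spd_bins, labels=spd_labels, right=True).astype(str)

# Direction bins as integer codes, mapped to sector centres; 360 wraps to 0.
codes = pd.cut(toy['wind_direction'], bins=dir_bins, labels=False, right=False).to_numpy()
dir_bin = dir_centres[codes] % 360

# Count per (direction, speed) cell, then convert to a percentage of all rows.
toy_rose = (
    toy.assign(spd_bin=spd_bin, dir_bin=dir_bin)
    .groupby(['dir_bin', 'spd_bin'])
    .size()
    .unstack(fill_value=0)
    / len(toy) * 100
)
print(toy_rose)
```

Each of the five observations contributes 20% to one cell, so the whole table sums to 100. The reading at 350 degrees lands in the sector centred on 0, alongside the readings at 0 and 10 degrees.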

directions = np.arange(0, 360, 15)

def wind_rose(rosedata, wind_dirs, palette=None):

    if palette is None:
        palette = sns.color_palette('inferno', n_colors=rosedata.shape[1])

    bar_dir, bar_width = _convert_dir(wind_dirs)

    fig, ax = plt.subplots(figsize=(10, 10), subplot_kw=dict(polar=True))
    ax.set_theta_direction('clockwise')
    ax.set_theta_zero_location('N')

    for n, (c1, c2) in enumerate(zip(rosedata.columns[:-1], rosedata.columns[1:])):
        if n == 0:
            # first column only
            ax.bar(bar_dir, rosedata[c1].values,
                   width=bar_width,
                   color=palette[0],
                   edgecolor='none',
                   label=c1,
                   linewidth=0)

        # all other columns
        ax.bar(bar_dir, rosedata[c2].values,
               width=bar_width,
               bottom=rosedata.cumsum(axis=1)[c1].values,
               color=palette[n+1],
               edgecolor='none',
               label=c2,
               linewidth=0)

    leg = ax.legend(loc=(0.75, 0.95), ncol=2)
    xtl = ax.set_xticklabels(['N', 'NE', 'E', 'SE', 'S', 'SW', 'W', 'NW'])

    return fig

fig = wind_rose(rose, directions)